It is becoming abundantly clear that the minimum wage has gone the way of the buggy whip, and that a de facto entry-level wage of $15 per hour is emerging as the national standard in the private sector.

The shock to the economy unleashed by the pandemic, and the response by workers, have radically transformed the wage-earning landscape. And this transformation is being driven by the private sector, not by federal, state or local governments.

Middle market firms that make up the real economy are adapting to this transformation by paying higher wages, improving working conditions, offering more flexibility to workers, or all of the above.

At the same time, many firms are looking to substitute technology for labor to offset rising labor costs. But this dynamic will remain fluid as the natural tension between labor and capital evolves.

In the end, the era of surplus labor and the setting of entry-level wages well below the cost of living appear to be in the rearview mirror.

A short history of monopsony

The pandemic and the economic shutdown gave employees the opportunity to rethink their working conditions. In many cases, workers realized that their jobs were just not worth their time.

Because of the rise of online shopping and, most important, Amazon's $15 starting wage, the private sector has found itself competing for a dwindling supply of low-wage employees after four decades of what economists call monopsony power.

At first, employers were shocked that they could not attract quality workers at the wage scales they were used to paying. But then they realized that the landscape had shifted, and that the de facto minimum wage had become $15 an hour. Walmart Inc., McDonald's and Chipotle Mexican Grill have already announced their intention to match Amazon's starting wage, and small businesses will be hard-pressed not to follow.

Evolution of the minimum wage

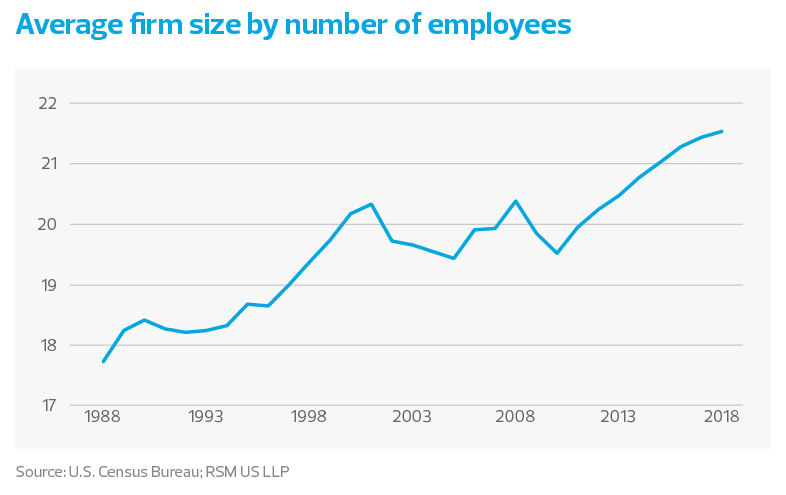

Monopsony describes a labor market in which employment choices are consolidated among one or a few employers, which can then set wages below what a competitive market would pay. Among the earliest examples were the coal mining towns of West Virginia. In the modern era, Walmart Inc. in the South, Rite Aid in New England, and McDonald's everywhere have become examples.

As each of those establishments moved into areas that once had scores of small businesses in downtown commercial districts, employment choices declined from many to just a few as those small businesses closed. And because the minimum wage didn't keep up with the rising cost of living, the working poor had fewer and fewer choices for work. In many cases, the choice was to join the multinational company at wages that steadily lost buying power, or perhaps to go on public assistance.

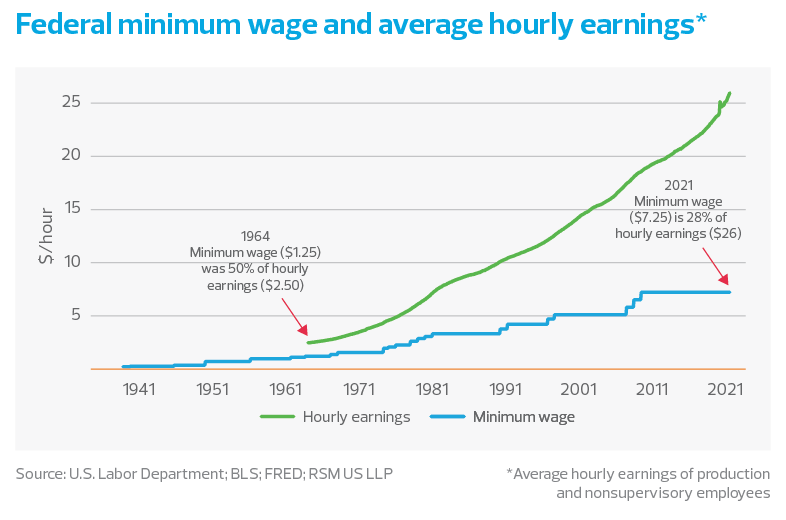

That was not the original intent of the minimum wage. Introduced in 1933, the minimum wage was set at 50% of the average hourly rate of 50 cents, or 25 cents per hour. It was designed as an efficient way to lift working families out of the poverty of the Great Depression.

By 1964, at the height of U.S. industrial power, the minimum wage remained at 50% of the average hourly rate of $2.50, or $1.25 per hour.

Over the next five decades, however, increases in the minimum wage did not keep up with average hourly earnings. By 2019, before the pandemic, the minimum wage had fallen to 30% of average hourly earnings, while the purchasing power of the dollar had declined by nearly 90% since 1964.
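The ratios in the preceding paragraphs can be checked with a few lines of arithmetic. The Python sketch below is illustrative only: the function names are hypothetical, the $7.25 figure is the federal minimum wage in 2019 (it is not stated in the text above), and reading "nearly 90%" as an 88% decline in purchasing power is an assumption made purely for the example.

```python
# Illustrative arithmetic for the minimum-wage ratios discussed above.
# Function names are hypothetical; assumptions are marked below.

FEDERAL_MINIMUM_2019 = 7.25  # federal minimum wage in 2019 (in effect since 2009)


def min_to_avg_ratio(minimum: float, average: float) -> float:
    """Minimum wage expressed as a share of average hourly earnings."""
    return minimum / average


def implied_average(minimum: float, ratio: float) -> float:
    """Average hourly earnings implied by a minimum wage and its ratio to the average."""
    return minimum / ratio


def in_later_dollars(amount: float, purchasing_power_decline: float) -> float:
    """Re-express an earlier dollar amount in later dollars, given the
    fractional decline in the dollar's purchasing power between the two dates."""
    return amount / (1.0 - purchasing_power_decline)


# 1933: 50% of a 50-cent average hourly rate
min_1933 = 0.50 * 0.50
# 1964: 50% of a $2.50 average hourly rate
min_1964 = 0.50 * 2.50
# 2019: a 30% ratio implies the average hourly earnings behind it
avg_2019 = implied_average(FEDERAL_MINIMUM_2019, 0.30)
# ASSUMPTION: "nearly 90%" read as an 88% decline, for illustration only
min_1964_in_2019_dollars = in_later_dollars(min_1964, 0.88)

print(f"1933 minimum: ${min_1933:.2f}")
print(f"1964 minimum: ${min_1964:.2f} ({min_to_avg_ratio(min_1964, 2.50):.0%} of average)")
print(f"Implied 2019 average hourly earnings: ${avg_2019:.2f}")
print(f"1964 minimum in 2019 dollars: ${min_1964_in_2019_dollars:.2f}")
```

Under those assumptions, the 1964 minimum of $1.25 works out to roughly $10.42 in 2019 dollars, well above the $7.25 federal minimum that year, which is the loss of buying power the paragraph describes.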

In effect, a stagnant minimum wage acted as an artificial ceiling on wages. As manufacturing jobs began disappearing from the American economy in the 1980s, employers faced less competition for workers. And because low-income families often lack the means to move to higher-paying areas, employers could find plenty of willing workers as long as they paid anything above the minimum wage.